Introduction

In the realm of concurrent programming, a fundamental understanding of processes and threads is crucial. Let's delve into this with a focus on logical processors.

Each logical processor in a system can execute one thread at a time (we will see the difference between physical and logical processors in a while).

For example, a system with 8 logical processors can run at most 8 threads truly in parallel. It can still make progress on far more than 8 threads, because context switching lets a single processor alternate between threads quickly enough that they appear to run at the same time.

Now processes, essentially programs in execution, spawn threads. These threads, in turn, are capable of creating additional threads, forming a multi-threaded environment. These are OS-level threads, entirely managed by the operating system, and they form the backbone of traditional concurrent execution.

Now, Go introduces an innovative approach to concurrency: goroutines. A goroutine is a lightweight thread of execution, managed not by the operating system but by the Go runtime itself. This management style offers a significant departure from traditional thread handling, providing both efficiency and reduced overhead.

(It's important to note that this article doesn't aim to cover the basics of what goroutines are or their creation process. For those new to goroutines, a practical example can be found here: Go by Example: Goroutines.)

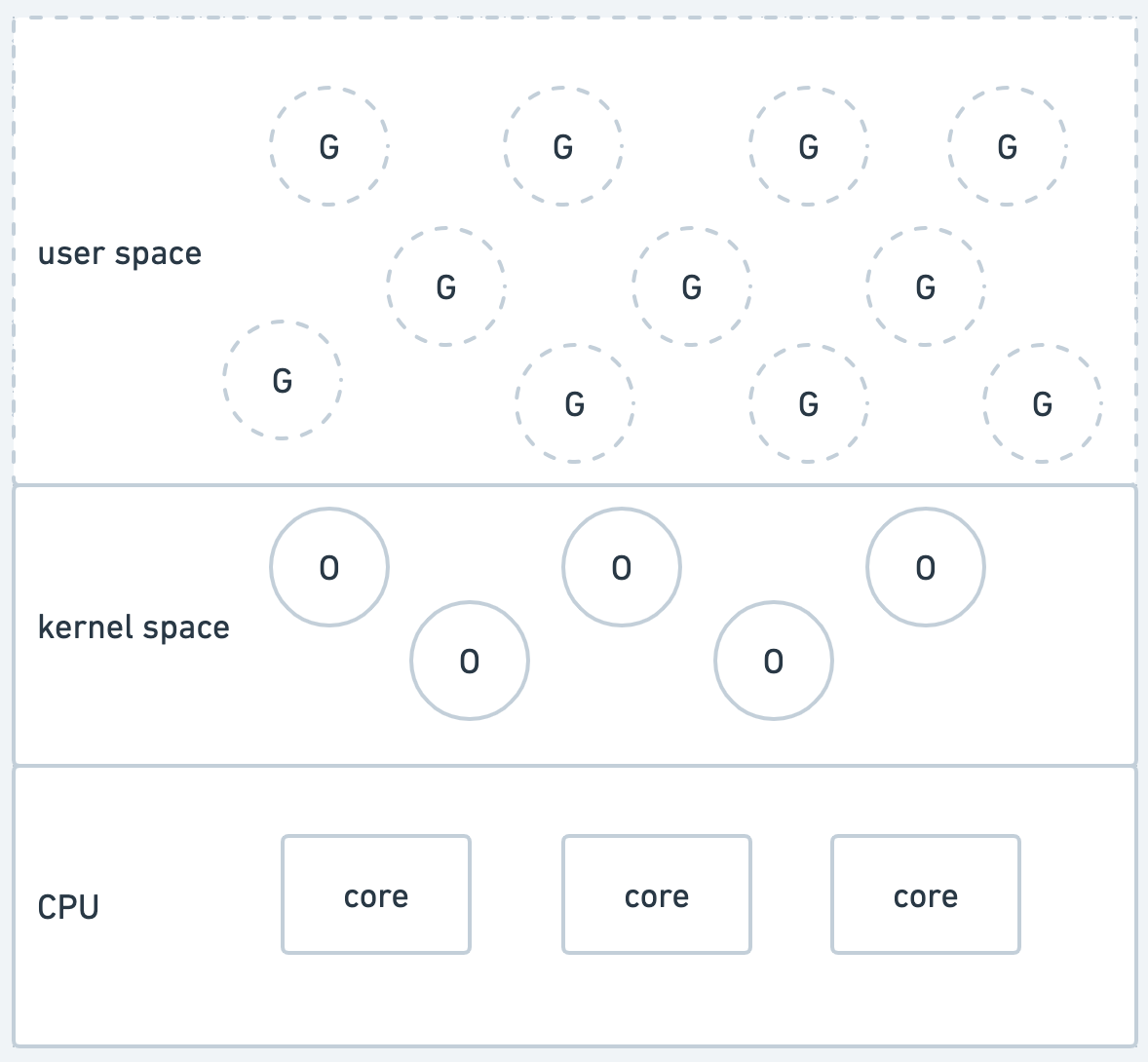

Goroutines operate in user space: the Go runtime creates, schedules, and switches between them without involving the kernel, which is what makes them cheaper than OS threads.

OS-level threads reside in the kernel space, governed by the operating system's scheduling and management mechanisms.

CPU cores, the underlying hardware layer, execute the threads or goroutines scheduled by the respective managing entities.
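To make the "lightweight" claim concrete, here is a minimal sketch that starts one goroutine per number and adds up the squares. The function name sumSquares is made up for this illustration; the point is only that launching thousands of goroutines is an ordinary thing to do in Go.

```go
package main

import (
	"fmt"
	"sync"
)

// sumSquares starts one goroutine per number in 1..n and adds up the squares.
// It is a hypothetical helper for this illustration.
func sumSquares(n int) int {
	var (
		wg    sync.WaitGroup
		mu    sync.Mutex
		total int
	)
	for i := 1; i <= n; i++ {
		wg.Add(1)
		go func(v int) {
			defer wg.Done()
			mu.Lock() // protect the shared total
			total += v * v
			mu.Unlock()
		}(i)
	}
	wg.Wait() // block until every goroutine has finished
	return total
}

func main() {
	// Ten thousand goroutines, multiplexed by the runtime onto a few OS threads.
	fmt.Println(sumSquares(10000))
}
```

Doing the same with ten thousand OS threads would be far more expensive, since each thread is created and scheduled by the kernel.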

Go Runtime

The Go runtime is a powerhouse underpinning the execution of Go programs, encompassing several vital components. These components work behind the scenes, much like the engine of a car, powering the language's capabilities and ensuring smooth operation. Among these components, we find:

Garbage Collection: This is how Go manages memory. It automatically frees memory that is no longer reachable, so programs do not have to release it by hand.

Goroutine Scheduler: Here lies the core of concurrent execution in Go. The scheduler manages goroutines, deciding which ones should be active at any given moment.

The runtime contains much more, but these two are the components that matter for this article.

Go Scheduler

The Go scheduler, in particular, is an integral part of this runtime machinery. It's responsible for orchestrating the execution of goroutines and is where the magic of concurrency in Go really happens. Here's a closer look at what the scheduler does:

Managing Goroutines: The scheduler decides which goroutines should run and when. It's like a conductor ensuring each musician (goroutine) plays at the right moment in an orchestra.

Multiplexing on OS Threads: Go uses a model often referred to as M:N multiplexing. It means the scheduler takes multiple goroutines and efficiently runs them on a smaller number of OS threads. This is a key aspect of why goroutines are more lightweight compared to traditional threads.

Cooperative Scheduling: Unlike systems where the OS preempts threads on a timer, Go's scheduler was originally purely cooperative.

This means that goroutines yield control back to the scheduler at specific points, such as channel operations, blocking I/O or network calls, and function calls. Yielding at known points keeps switches between goroutines cheap, because the runtime does not have to interrupt running code.

(However, since Go 1.14 the scheduler also preempts asynchronously: a goroutine that has been running for too long is interrupted, ensuring no single goroutine can monopolize the CPU for extended periods.)
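The effect of preemption can be observed with a small experiment. The sketch below assumes Go 1.14 or later on a platform that supports asynchronous preemption; on an older runtime it would be expected to hang. The function name spinUntilStopped is invented for this example.

```go
package main

import (
	"fmt"
	"runtime"
	"sync/atomic"
	"time"
)

// spinUntilStopped runs a busy loop that never yields voluntarily, with only
// one goroutine allowed to execute at a time, and reports whether the caller
// still received CPU time.
func spinUntilStopped() bool {
	prev := runtime.GOMAXPROCS(1) // a single scheduling slot
	defer runtime.GOMAXPROCS(prev)

	var stop int32
	done := make(chan struct{})
	go func() {
		for atomic.LoadInt32(&stop) == 0 {
			// tight loop: no I/O, no channel operations, no function calls
		}
		close(done)
	}()

	time.Sleep(20 * time.Millisecond) // the spinner takes over the slot here
	atomic.StoreInt32(&stop, 1)       // only reachable if the spinner was preempted
	<-done
	return true
}

func main() {
	fmt.Println("main ran again after preemption:", spinUntilStopped())
}
```

With a purely cooperative scheduler the spinning goroutine would never hand the slot back, and the store to stop would never execute.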

How Scheduling Works

Logical Processors

A key aspect of Go's scheduling mechanism is its interaction with the logical cores of your system. Here's a breakdown of how this works:

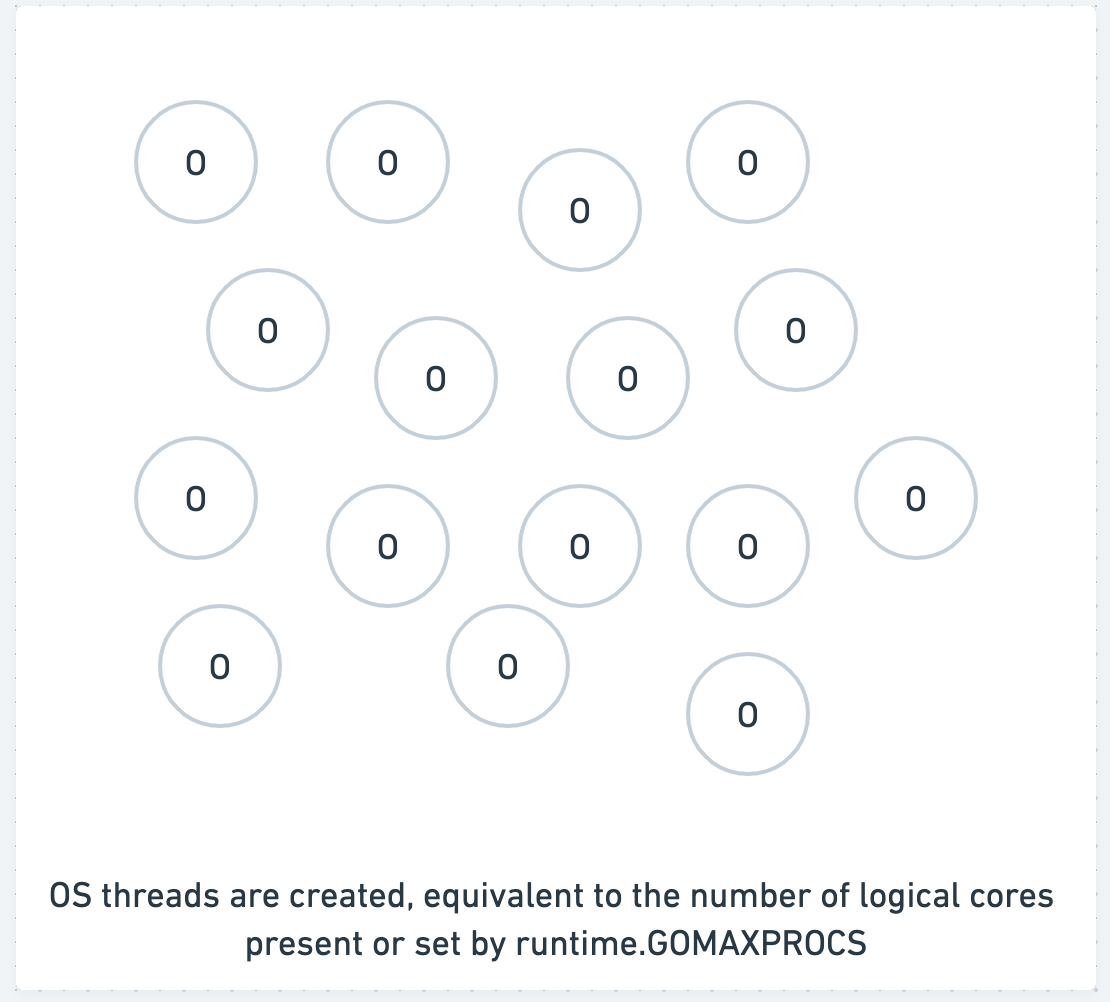

Launching OS Threads: When you start a Go program, the runtime sets up its scheduler so that the number of OS threads executing Go code at the same time matches the number of logical processors available to it. This limit is the GOMAXPROCS value.

- These OS threads are entirely managed by the operating system. This management includes tasks like creating the threads, blocking them, and handling their waiting states.

What are logical processors: To grasp this concept, it's essential to define what we mean by 'logical cores/processors.' The formula is quite straightforward:

logical cores = number of physical cores * number of threads that can run on each core (hardware threads)

For example, my Mac has 8 physical cores, and each core can run 2 hardware threads, so I have a total of 8 * 2 = 16 logical cores.

Determining the Number of Logical Processors: Go provides a handy method to find out the number of logical processors on your system:

runtime.NumCPU()

When you run a program like the one below, it prints how many logical processors are available to the process, which is the upper bound on how many threads can execute in parallel.

    package main

    import (
        "fmt"
        "runtime"
    )

    func main() {
        fmt.Println("Number of CPUs:", runtime.NumCPU())
    }

If the output is something like:

    Number of CPUs: 16

This indicates that I have 16 logical processors available, meaning 2 hardware threads can run on each of the 8 physical cores.
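A related value is GOMAXPROCS, which caps how many OS threads execute Go code simultaneously. As a small sketch, calling runtime.GOMAXPROCS with an argument of 0 reads the current setting without changing it. The helper name schedulerWidth is made up for this example.

```go
package main

import (
	"fmt"
	"runtime"
)

// schedulerWidth returns the number of logical CPUs and the current
// GOMAXPROCS setting. Passing 0 to GOMAXPROCS only queries the value.
func schedulerWidth() (cpus, procs int) {
	return runtime.NumCPU(), runtime.GOMAXPROCS(0)
}

func main() {
	cpus, procs := schedulerWidth()
	fmt.Println("Logical CPUs:", cpus)
	fmt.Println("GOMAXPROCS:", procs)
}
```

The two numbers often match, but they can differ, for instance when the GOMAXPROCS environment variable is set or the program calls runtime.GOMAXPROCS itself.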

M:N multiplexing

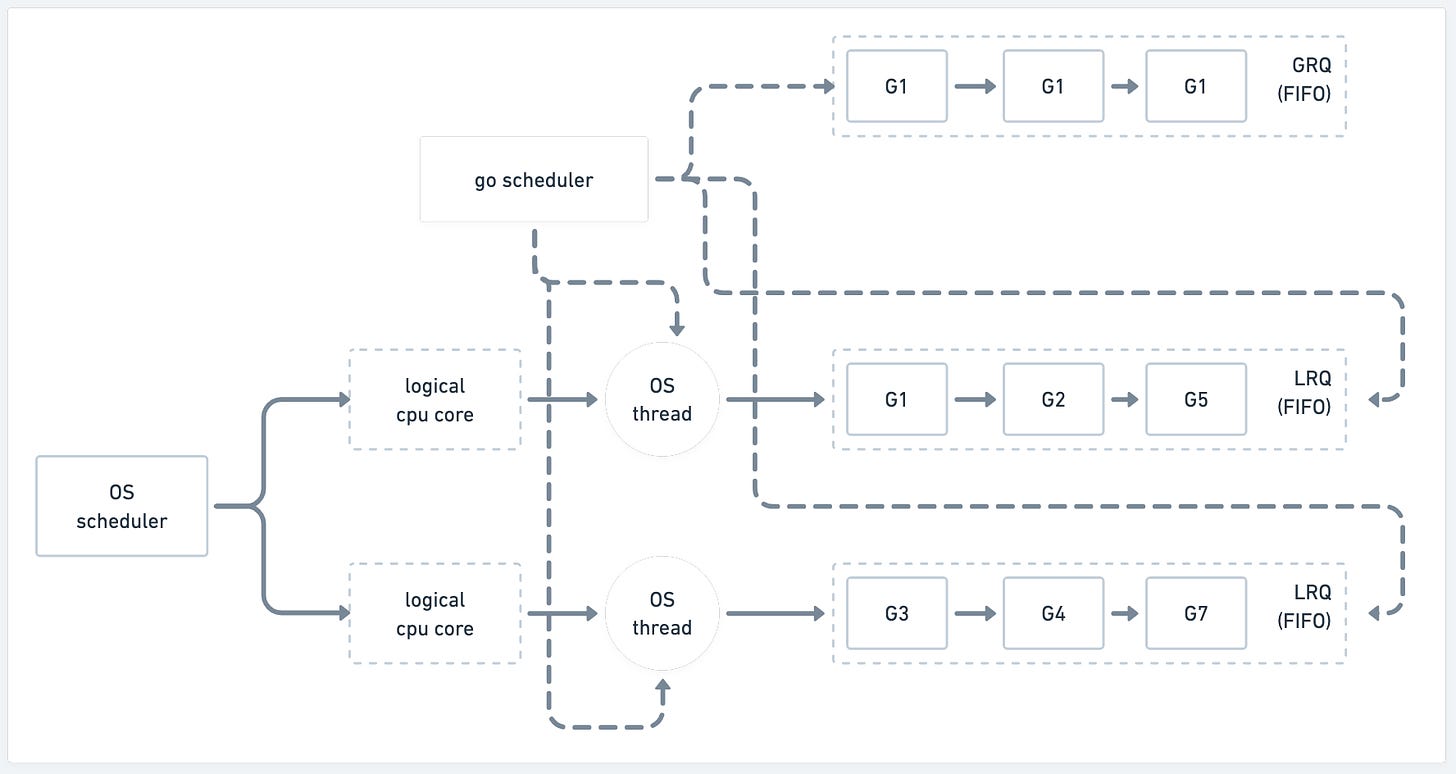

M:N multiplexing in the context of the Go scheduler refers to the model where multiple goroutines (M) are multiplexed onto a smaller number of OS threads (N).
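A quick way to see the M:N relationship is to compare the number of live goroutines with GOMAXPROCS. The sketch below is only an illustration; liveGoroutines is a hypothetical helper that keeps n goroutines blocked on a channel while it counts them.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// liveGoroutines starts n goroutines that wait on a channel and returns the
// goroutine count observed while all of them still exist.
func liveGoroutines(n int) int {
	release := make(chan struct{})
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			<-release // wait until the count has been taken
		}()
	}
	alive := runtime.NumGoroutine() // includes the caller's goroutine
	close(release)
	wg.Wait()
	return alive
}

func main() {
	fmt.Println("GOMAXPROCS:", runtime.GOMAXPROCS(0))
	fmt.Println("Goroutines alive:", liveGoroutines(1000))
}
```

On a typical machine the first number is in the single or double digits while the second exceeds 1000, which is the many-onto-few mapping described above.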

Setting the Scene: OS Threads and Logical Cores

When a Go program begins, the Go runtime initializes a set of OS threads corresponding to the number of logical cores detected on the machine.

Each of these threads will have its own Local Run Queue (LRQ) for goroutines.

The introduction of LRQs was a strategic move by Go to decentralize goroutine management. By assigning an LRQ to each OS thread, Go minimized the contention that a singular global queue would face, thus streamlining concurrent execution.

The Role of the Global Run Queue (GRQ)

The GRQ plays a pivotal part in this narrative.

It holds the goroutines that are ready for action but haven't yet been assigned to an LRQ.

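Work moves from the GRQ to the LRQs as threads look for something to run: a thread turns to the GRQ when its own LRQ is empty, and it also checks the GRQ periodically so that goroutines waiting there are not starved. This mechanism ensures a fair and balanced distribution of work among the available OS threads.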

Drama of Parking and Unparking OS Threads

In Go's grand concurrency theater, there's a subtle yet pivotal act known as the parking and unparking of OS threads, a strategy that showcases Go's efficiency in managing system resources.

The Art of Parking:

Imagine a scenario where the stage – our CPU – has no immediate role for some of its actors, the OS threads. Instead of sending them away (terminating the threads), Go's runtime opts for a more economical approach: it 'parks' these threads.

Parking is akin to having the actors wait in the green room; they're not actively performing, but they're ready to jump back into action at a moment's notice.

During this parking, the thread's current state is saved, and it enters a low-resource-consuming sleep mode, awaiting its next performance.

Why Parking Matters:

Creating and destroying OS threads is resource-intensive – it's like continually hiring and firing actors for each scene, an inefficient use of time and resources.

By parking idle threads, Go keeps them in a state of readiness. This approach is far more efficient than the constant overhead of creating and destroying threads.

The Unparking Cue:

When new goroutines are ready to run, Go's runtime 'unparks' the relevant threads, summoning them back to the CPU to take up their roles.

This unparking process involves waking the thread from its sleep state, restoring its saved state, and assigning it new goroutines to execute.

It's like calling actors back to the stage when it's their turn to perform, ensuring a smooth and continuous play.

Optimization and Responsiveness:

This mechanism of parking and unparking is a testament to Go's optimized use of system resources.

It not only saves the cost of thread management but also ensures that the runtime can quickly respond to new tasks.

The threads are always in a state of near-readiness, minimizing the latency that would be involved in thread creation.
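The runtime's thread parking is internal and cannot be called from user code, but the same pattern can be sketched one level up as an analogy. In the example below, long-lived workers sleep on a sync.Cond while there is nothing to do and are woken when work arrives, instead of being created and destroyed per task. The pool type and its methods are hypothetical names for this illustration, not part of the Go runtime.

```go
package main

import (
	"fmt"
	"sync"
)

// pool is a hypothetical worker pool used only to illustrate park/unpark.
type pool struct {
	mu     sync.Mutex
	cond   *sync.Cond
	tasks  []int
	closed bool
	sum    int
}

func newPool() *pool {
	p := &pool{}
	p.cond = sync.NewCond(&p.mu)
	return p
}

// worker sleeps ("parks") while the queue is empty and is woken ("unparked")
// by submit or shutdown.
func (p *pool) worker(wg *sync.WaitGroup) {
	defer wg.Done()
	p.mu.Lock()
	defer p.mu.Unlock()
	for {
		for len(p.tasks) == 0 && !p.closed {
			p.cond.Wait() // park: releases the lock and sleeps
		}
		if len(p.tasks) == 0 {
			return // closed and fully drained
		}
		t := p.tasks[0]
		p.tasks = p.tasks[1:]
		p.sum += t // "run" the task (under the lock, for simplicity)
	}
}

// submit queues a task and wakes one parked worker.
func (p *pool) submit(t int) {
	p.mu.Lock()
	p.tasks = append(p.tasks, t)
	p.mu.Unlock()
	p.cond.Signal()
}

// shutdown wakes every worker so it can drain the queue and exit.
func (p *pool) shutdown() {
	p.mu.Lock()
	p.closed = true
	p.mu.Unlock()
	p.cond.Broadcast()
}

// runPool feeds the tasks 1..tasks to the given number of workers and
// returns the sum of all processed task values.
func runPool(workers, tasks int) int {
	p := newPool()
	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go p.worker(&wg)
	}
	for t := 1; t <= tasks; t++ {
		p.submit(t)
	}
	p.shutdown()
	wg.Wait()
	return p.sum
}

func main() {
	fmt.Println(runPool(3, 100)) // prints 5050
}
```

The three workers are created once and reused for all 100 tasks, which mirrors why the runtime prefers parking a thread over tearing it down.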

Work Stealing: The Twist in the Tale

Work stealing is a thrilling twist in Go's concurrency narrative. Imagine an OS thread runs out of goroutines in its LRQ. Instead of sitting idle, it turns to its peers' LRQs or even the GRQ, 'stealing' a goroutine to keep itself busy.

This strategy is ingenious: it ensures that all OS threads are gainfully employed, maximizing CPU utilization and preventing any thread from becoming a performance bottleneck.

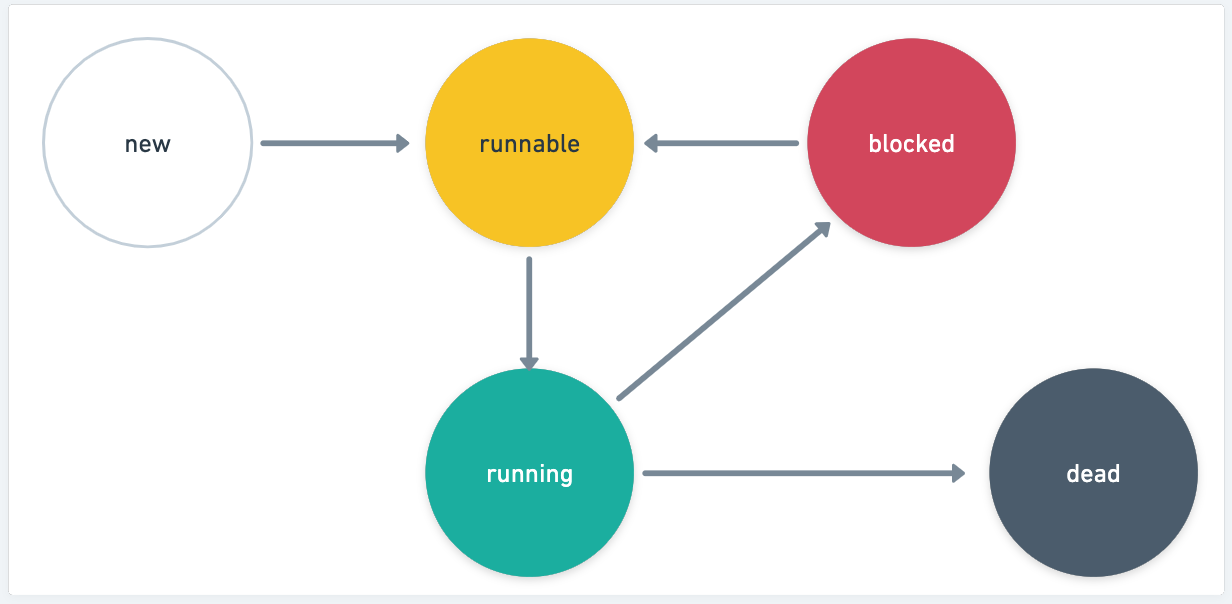
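The lookup order can be sketched with a toy, single-threaded model. This is not the runtime's implementation: runQueue, stealHalf, and findWork are invented names, the queues hold plain task IDs, and there is no locking. It only shows the idea of an idle queue taking half of a busy peer's work.

```go
package main

import "fmt"

// runQueue is a hypothetical, simplified run queue holding task IDs.
type runQueue struct {
	tasks []int
}

// stealHalf moves half of the victim's tasks (rounded up) to the thief and
// returns how many tasks were moved.
func stealHalf(thief, victim *runQueue) int {
	n := (len(victim.tasks) + 1) / 2
	thief.tasks = append(thief.tasks, victim.tasks[:n]...)
	victim.tasks = victim.tasks[n:]
	return n
}

// findWork returns the next task for q. It looks in q's own queue first,
// then the global queue, and finally steals from the first peer with work.
func findWork(q, global *runQueue, peers []*runQueue) (int, bool) {
	if len(q.tasks) == 0 && len(global.tasks) > 0 {
		q.tasks = append(q.tasks, global.tasks[0])
		global.tasks = global.tasks[1:]
	}
	if len(q.tasks) == 0 {
		for _, p := range peers {
			if p != q && len(p.tasks) > 0 {
				stealHalf(q, p)
				break
			}
		}
	}
	if len(q.tasks) == 0 {
		return 0, false // nothing to run anywhere
	}
	t := q.tasks[0]
	q.tasks = q.tasks[1:]
	return t, true
}

func main() {
	busy := &runQueue{tasks: []int{1, 2, 3, 4, 5, 6}}
	idle := &runQueue{}
	global := &runQueue{}

	task, ok := findWork(idle, global, []*runQueue{busy, idle})
	fmt.Println(task, ok, len(idle.tasks), len(busy.tasks)) // prints: 1 true 2 3
}
```

The idle queue ends up running task 1 with two more tasks queued behind it, while the busy queue keeps the remaining three.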

Goroutine States

Goroutine states are akin to different phases of activity, each crucial for the seamless execution of concurrent tasks.

Runnable:

This is the preparatory phase.

Goroutines in the Runnable state are queued and ready for execution.

They are like actors in the wings, scripts in hand, awaiting their cue to step onto the stage (i.e., an OS thread).

Running:

When a goroutine enters the Running state, it's actively executing code on an OS thread.

This is the spotlight moment, where the goroutine is performing its designated task, utilizing CPU resources.

Blocked:

A goroutine enters the Blocked state when it's waiting for external events like I/O operations, network communication, or synchronization events (like waiting on a channel).

While Blocked, the goroutine steps out of the limelight and does not consume CPU resources. It's like an actor pausing backstage, waiting for the next scene.
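The three states can be followed through a very small program. The sketch below uses two unbuffered channels; the function names double and roundTrip are made up for this example, and the state annotations in the comments describe the typical sequence rather than something the program can observe directly.

```go
package main

import "fmt"

// double waits for a value on in and sends twice that value on out.
func double(in <-chan int, out chan<- int) {
	v := <-in    // Blocked here if no value has been sent yet
	out <- v * 2 // Running again once a sender has delivered a value
}

// roundTrip starts a goroutine, hands it a value, and returns the result.
func roundTrip(v int) int {
	in := make(chan int)
	out := make(chan int)
	go double(in, out) // Runnable: queued until a thread picks it up
	in <- v            // hands over the value; a waiting receiver becomes Runnable
	return <-out       // the caller itself blocks here until the result arrives
}

func main() {
	fmt.Println(roundTrip(21)) // prints 42
}
```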

Incorporating Syscalls and I/O Calls

Goroutine states are not just about readiness or execution; they also intricately handle scenarios involving system calls (syscalls) and I/O operations.

Syscalls and I/O in the Blocked State:

When a goroutine executes a syscall or an I/O operation, it often transitions to the Blocked state.

This is because such operations typically wait for external resources or events, like reading from a disk or waiting for a network response.

While a goroutine is Blocked waiting for these operations to complete, it relinquishes the CPU, allowing other goroutines to run.

Efficient Handling of Blocking Operations:

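When a goroutine blocks on a syscall or an I/O operation, the Go scheduler takes this opportunity to run another goroutine. For network I/O the runtime parks only the goroutine, so the same thread can pick up the next one; when a syscall blocks the thread itself, the runtime hands that thread's queued goroutines to another thread.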

This design ensures that the CPU is not left idle, thus optimizing resource utilization.
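As a rough demonstration, the sketch below limits the program to one scheduling slot, blocks one goroutine on a pipe read, and shows that a second goroutine still completes its computation in the meantime. The function name readWhileComputing is invented for this example.

```go
package main

import (
	"fmt"
	"os"
	"runtime"
)

// readWhileComputing blocks one goroutine on a pipe read while another
// goroutine computes a sum, with only one goroutine allowed to run at a time.
func readWhileComputing(msg string) (string, int, error) {
	prev := runtime.GOMAXPROCS(1)
	defer runtime.GOMAXPROCS(prev)

	r, w, err := os.Pipe()
	if err != nil {
		return "", 0, err
	}
	defer r.Close()

	read := make(chan string, 1)
	go func() {
		buf := make([]byte, 64)
		n, _ := r.Read(buf) // blocks until data is written or the pipe closes
		read <- string(buf[:n])
	}()

	computed := make(chan int)
	go func() {
		sum := 0
		for i := 1; i <= 1000; i++ {
			sum += i
		}
		computed <- sum
	}()

	sum := <-computed // finishes before anything has been written to the pipe
	if _, err := w.WriteString(msg); err != nil {
		w.Close()
		return "", 0, err
	}
	w.Close()
	return <-read, sum, nil
}

func main() {
	got, sum, err := readWhileComputing("hello")
	if err != nil {
		panic(err)
	}
	fmt.Println(got, sum) // prints: hello 500500
}
```

The reader cannot finish until the write happens, yet the computation completes first, so the pending read did not hold up the single scheduling slot.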

Glimpse into Go's Scheduling: Using the Tracing Tool

To bring the concepts of Go's concurrency model to life, let's dive into a hands-on demonstration using Go's built-in tracing tool. This tool allows us to visually inspect how goroutines are scheduled and managed by the Go runtime.

I’ll be using the following code:

    package main

    import (
        "fmt"
        "os"
        "runtime"
        "runtime/trace"
        "sync"
        "time"
    )

    // Define functions fn1 through fn5, each performing simple operations
    // and printing results.

    func fn1(wg *sync.WaitGroup) {
        defer wg.Done() // Signal completion of goroutine
        fmt.Println("Executing function 1")
    }

    func fn2(wg *sync.WaitGroup) {
        defer wg.Done()
        sum(1, 2) // Calls a function to calculate the sum
        fmt.Println("Executing function 2")
    }

    func fn3(wg *sync.WaitGroup) {
        defer wg.Done()
        subtract(2, 1) // Calls a function to calculate the difference
        fmt.Println("Executing function 3")
    }

    func fn4(wg *sync.WaitGroup) {
        defer wg.Done()
        multiply(2, 2) // Calls a function to calculate the product
        fmt.Println("Executing function 4")
    }

    func fn5(wg *sync.WaitGroup) {
        defer wg.Done()
        divide(4, 2) // Calls a function to calculate the quotient
        fmt.Println("Executing function 5")
    }

    // Define arithmetic operations: sum, subtract, multiply, divide

    func main() {
        var wg sync.WaitGroup

        // Allow at most 3 OS threads to execute Go code at the same time
        runtime.GOMAXPROCS(3)

        // Create and open a file to store trace data
        f, err := os.Create("trace.out")
        if err != nil {
            panic(err)
        }
        defer f.Close()

        // Start tracing; the deferred trace.Stop ends it when main returns
        err = trace.Start(f)
        if err != nil {
            panic(err)
        }
        defer trace.Stop()

        // Adding 5 goroutines to the WaitGroup
        wg.Add(5)

        // Starting each function as a goroutine
        go fn1(&wg)
        go fn2(&wg)
        go fn3(&wg)
        go fn4(&wg)
        go fn5(&wg)

        // Waiting for all goroutines to complete
        wg.Wait()

        fmt.Println("All goroutines completed")
    }

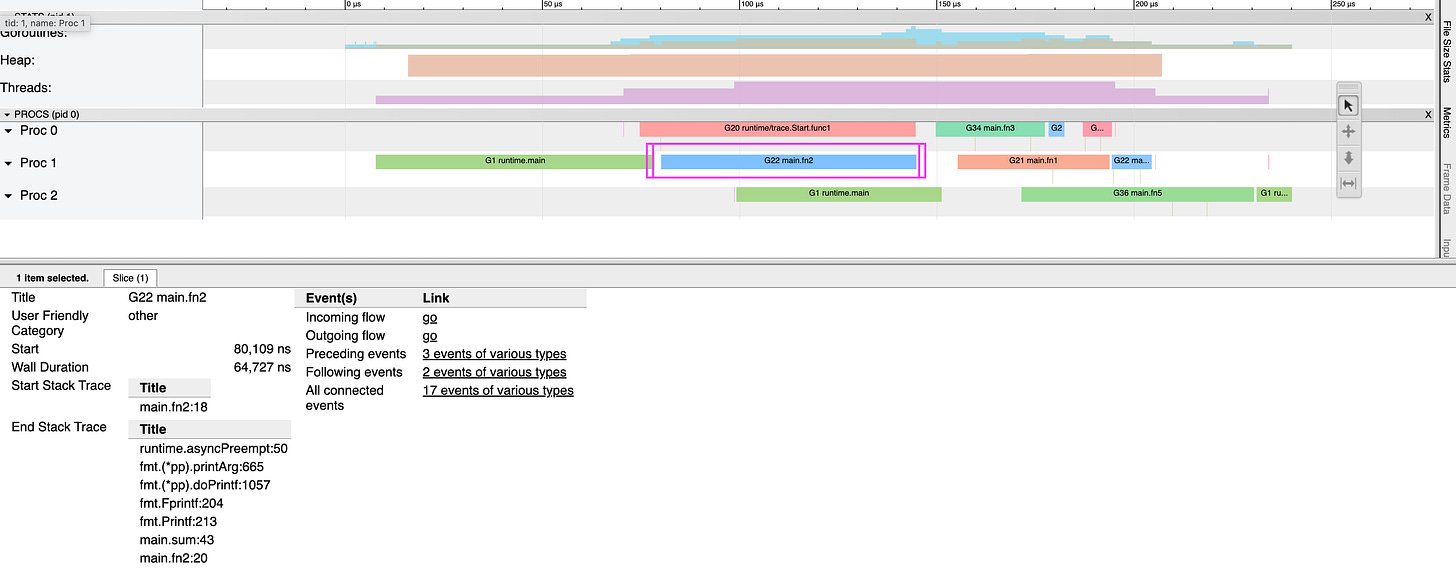

After running this program, a trace file named trace.out is generated. Analyzing this file with Go's trace tool (go tool trace trace.out) provides a visual representation of the scheduling and execution of these goroutines.

For example here:

G22 main.fn2: This indicates the trace is for the goroutine labeled '22', executing the function main.fn2.

Start and End Stack Trace: These show the function call sequence and the line numbers in the code, providing insights into what the goroutine was executing at that time.

- Start trace: line 18, which is

func fn2(wg *sync.WaitGroup)

- End trace: a series of function calls leading up to the end of the trace segment.

runtime.asyncPreempt:50: Indicates an asynchronous preemption by the Go runtime at this point in the code. Asynchronous preemption in Go is used to stop a goroutine, allowing the scheduler to run other goroutines. It's part of Go's strategy to efficiently manage CPU time among goroutines.

This stack trace essentially shows the sequence of function calls that occurred during the execution of main.fn2, ending with a call to fmt.Printf within the main.sum function.