Live Streaming: From Camera Lens to Your Screen

We all enjoy using platforms like Hotstar, YouTube, and JioCinema to immerse ourselves in the exciting world of live streaming, whether it’s for sports, movies, or other entertainment.

Now, accessing and viewing content over the internet can be done in several ways:

Downloading is when you completely transfer the content to your device before viewing it.

Streaming delivers data, such as video, music, or gaming content, as a continuous flow that you can start consuming before the whole file has arrived. This content is usually pre-recorded.

Live streaming is a special form of streaming in which the content is broadcast as it is being recorded, with only a short delay.

In this blog, we're going to dive into how live streaming works. First, though, it's essential to build a foundation of key networking and video concepts.

Core Concepts

- Network bandwidth:

Think of this as the width of your internet connection's pipe.

It is the maximum possible data transfer rate of a network or internet connection.

For example, if a network's bandwidth is 40 Mbps, it can never transfer data faster than 40 Mbps.

- Network speed:

This is how fast data actually travels across your network: the actual rate at which data is transmitted, often called throughput. It is always at or below the bandwidth.

It's influenced by a mix of factors, including how the data is sent (the protocol), your device’s ability to receive data (wired or wireless), and how well a server can send data to many users at once.

- Bitrate:

This measures how many bits (basic units of digital information) travel over the network every second.

For live streaming video, it's the rate at which encoded video data is sent from the streaming server to viewers. A higher bitrate means more data is sent each second, which generally allows higher video quality but uses more bandwidth.

Take the example of a live sports match. Encoder software transforms the live camera feed into a streamable digital format. If the encoder is set to a bitrate of 5 Mbps, it sends 5 million bits of data every second to convey the live action.
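To put the 5 Mbps example in perspective, here's a small Python sketch that converts a bitrate into bytes per second and data per hour. It assumes decimal units (1 Mbps = 1,000,000 bits per second, 1 GB = 10^9 bytes) and a perfectly constant bitrate, which real encoders only approximate.

```python
# Convert a constant video bitrate into data volumes.
# Assumes decimal units: 1 Mbps = 1,000,000 bits/s and 1 GB = 10**9 bytes.

BITS_PER_BYTE = 8
SECONDS_PER_HOUR = 3600

def bytes_per_second(bitrate_mbps: float) -> float:
    """Bytes sent every second at the given bitrate (in Mbps)."""
    return bitrate_mbps * 1_000_000 / BITS_PER_BYTE

def gigabytes_per_hour(bitrate_mbps: float) -> float:
    """Total data for one hour of video at a constant bitrate (in GB)."""
    return bytes_per_second(bitrate_mbps) * SECONDS_PER_HOUR / 1_000_000_000

print(bytes_per_second(5))    # 625000.0
print(gigabytes_per_hour(5))  # 2.25
```

So one hour of a constant 5 Mbps stream is about 2.25 GB for every viewer watching it.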

Relying on a single, fixed bitrate causes problems. If it is set too low, viewers whose connections could handle more get worse quality than necessary; if it is set too high, viewers with slower connections experience buffering. This is where Adaptive Bitrate Streaming comes into play.

- Adaptive Bitrate Streaming:

Adaptive Bitrate Streaming (ABR) is a video streaming technique that aims to give you the best picture quality your connection can sustain. Here's how it works:

ABR automatically adjusts the video quality based on your internet speed, the device you're using, and its screen size, aiming to give you the clearest picture your setup can handle without interruptions.

Videos are prepared in several versions (renditions) with different qualities and sizes. As you watch a video, ABR switches between these versions in real time, depending on how good your internet connection is at the moment.

The video is broken down into small pieces, allowing the technology to quickly and smoothly switch to the best version for your current situation.

Whether you’re on a slow mobile connection or a fast home network, ABR works to keep playback smooth with minimal buffering on any device, from phones to big-screen TVs.
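Here's a deliberately simplified Python sketch of the core ABR decision: measure throughput from the last segment download, then pick the best version that fits. The three renditions, their names, and the 80% safety margin are illustrative assumptions, not values from any particular player; real players also weigh buffer levels and other signals.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int

# Hypothetical set of renditions (names and bitrates are illustrative).
LADDER = [
    Rendition("high", 5000),
    Rendition("medium", 2500),
    Rendition("low", 1000),
]

def estimate_throughput_kbps(segment_bytes: int, download_seconds: float) -> float:
    """Measured throughput: bits received divided by the time the download took."""
    return segment_bytes * 8 / 1000 / download_seconds

def choose_rendition(throughput_kbps: float, ladder=LADDER, safety: float = 0.8) -> Rendition:
    """Pick the highest-bitrate rendition that fits within a safety margin of the
    measured throughput; if none fits, fall back to the lowest bitrate."""
    budget = throughput_kbps * safety
    fitting = [r for r in ladder if r.bitrate_kbps <= budget]
    if fitting:
        return max(fitting, key=lambda r: r.bitrate_kbps)
    return min(ladder, key=lambda r: r.bitrate_kbps)

# A 2 MB segment that took 2 s to arrive suggests about 8000 kbps.
measured = estimate_throughput_kbps(2_000_000, 2.0)
print(measured, choose_rendition(measured).name)  # 8000.0 high
print(choose_rendition(3000).name)                # low (2500 exceeds the 2400 kbps budget)
```

Note how a 3000 kbps connection still gets the low version: the safety margin leaves headroom so that a small dip in speed doesn't stall playback.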

- Frame Rate:

A video is a sequence of still frames that, when played at speed, create the illusion of continuous motion. The frame rate, measured in frames per second (fps), dictates how quickly these frames are displayed.

For live streaming, a minimum of 30 fps is recommended to achieve fluid motion. Content with a lot of action, like sports, benefits from higher frame rates such as 50 or 60 fps, giving viewers a smoother, more immersive experience.

It's important to note that a higher frame rate demands more bandwidth. Balancing the frame rate with available bandwidth is key to delivering quality streaming without excessive buffering.
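A quick way to see what frame rate means in practice: this Python sketch computes how long each frame stays on screen and how many frames an encoder must produce per minute at a given rate.

```python
def frame_interval_ms(fps: float) -> float:
    """How long each frame stays on screen, in milliseconds."""
    return 1000 / fps

def frames_in(seconds: float, fps: float) -> int:
    """Number of frames the encoder must produce for a span of video."""
    return round(seconds * fps)

for fps in (30, 60):
    print(f"{fps} fps: {frame_interval_ms(fps):.1f} ms per frame, "
          f"{frames_in(60, fps)} frames per minute")
# 30 fps: 33.3 ms per frame, 1800 frames per minute
# 60 fps: 16.7 ms per frame, 3600 frames per minute
```

Going from 30 to 60 fps doubles the number of frames that must be compressed and delivered, which is why higher frame rates need more bandwidth.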

- Video Resolution:

The resolution of a video is expressed as its width x height in pixels, determining the level of detail. For example, a 2560 x 1440 resolution means the video has 2560 pixels horizontally and 1440 pixels vertically.

The camera determines the maximum quality available, but the quality viewers actually receive depends heavily on the encoder settings: output resolution, bitrate, and frame rate. Configuring these settings well is vital for maximizing the stream's visual quality while using bandwidth efficiently.
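Resolution also determines how many pixels each frame carries. This Python sketch compares a few common resolutions (the list is just a sample) against 1920 x 1080.

```python
# A sample of common resolutions as (width, height) in pixels.
RESOLUTIONS = [(1280, 720), (1920, 1080), (2560, 1440), (3840, 2160)]

def pixel_count(width: int, height: int) -> int:
    """Total pixels in one frame."""
    return width * height

base = pixel_count(1920, 1080)
for w, h in RESOLUTIONS:
    px = pixel_count(w, h)
    print(f"{w}x{h}: {px:,} pixels ({px / base:.2f}x 1920x1080)")
# 1280x720: 921,600 pixels (0.44x 1920x1080)
# 1920x1080: 2,073,600 pixels (1.00x 1920x1080)
# 2560x1440: 3,686,400 pixels (1.78x 1920x1080)
# 3840x2160: 8,294,400 pixels (4.00x 1920x1080)
```

A 2560 x 1440 frame, for example, has about 1.78 times as many pixels as a 1920 x 1080 frame, so it needs more bits to look equally sharp.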

By now, it's clear that streaming video involves more than just hitting "play."

Providers must consider viewers' varying internet speeds and devices, using technologies like Adaptive Bitrate Streaming (ABR) to adjust video quality in real-time. This ensures a smooth, high-quality viewing experience for everyone, no matter their connection or device.

Next, we'll break down what happens step by step to make this possible, keeping it concise and easy to understand.

Live Streaming Steps

Step 1: Video Capture

Live streaming starts with raw video data: the visual information captured by a camera.

Within the computing device to which the camera is attached, this visual information is represented as digital data – in other words, 1s and 0s at the deepest level.

At its core, the digital data now represents the captured image in terms of pixels, each pixel encoded with values that represent its color and brightness. These values are stored as binary data, which can be manipulated, compressed, and transmitted.
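To see why the next step matters, here's a back-of-the-envelope Python calculation of the raw, uncompressed data rate of a 1920 x 1080 camera feed at 30 fps. It assumes 24 bits per pixel (8 bits each for red, green, and blue); real capture pipelines often store fewer bits per pixel, for example through chroma subsampling.

```python
def raw_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: int = 24) -> float:
    """Uncompressed data rate: every bit of every pixel of every frame.
    Assumes 24 bits per pixel by default (8 bits each for R, G, B)."""
    return width * height * bits_per_pixel * fps

raw = raw_bitrate_bps(1920, 1080, 30)
print(f"{raw / 1e9:.2f} Gbps")                        # 1.49 Gbps
print(f"{raw / 5_000_000:.0f}x a 5 Mbps stream")      # 299x a 5 Mbps stream
```

That's roughly 1.49 Gbps, about 300 times the 5 Mbps example from earlier, which is why compression is essential.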

Step 2: Video Compression and Encoding Using Codecs

Compression:

Video compression is the process of reducing the size of the video file without significantly compromising its quality. This is achieved by identifying and eliminating redundant data within the video frames, as well as applying various algorithms to reduce the amount of data required to represent the video.

For example, if the first frame of the video shows a person talking against a grey background, the background doesn't need to be stored again for subsequent frames that share it; those frames can reuse it and describe only what changed.
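The grey-background idea can be sketched in Python as a toy inter-frame "delta": store only the pixels that changed since the previous frame. This illustrates the redundancy principle only; real codecs like H.264 work on blocks of pixels using motion compensation and transforms, not per-pixel diffs.

```python
# A frame is modeled as a flat list of pixel values (a toy stand-in for real image data).
Frame = list[int]

def delta_encode(prev: Frame, curr: Frame) -> list[tuple[int, int]]:
    """Return (index, new_value) only for pixels that changed since prev."""
    return [(i, v) for i, (p, v) in enumerate(zip(prev, curr)) if p != v]

def delta_decode(prev: Frame, changes: list[tuple[int, int]]) -> Frame:
    """Rebuild the current frame by applying the changes to the previous frame."""
    frame = list(prev)
    for i, v in changes:
        frame[i] = v
    return frame

GREY = 128
frame1 = [GREY] * 16             # first frame: all grey background
frame2 = list(frame1)
frame2[5] = frame2[6] = 200      # the speaker moves: only two pixels change

changes = delta_encode(frame1, frame2)
print(changes)                   # [(5, 200), (6, 200)]
assert delta_decode(frame1, changes) == frame2  # round-trips exactly
```

Instead of 16 pixel values, the second frame is described by just two changes.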

Encoding:

After compression, we turn the video into a format ready for streaming. This process is known as encoding. This is necessary because the original video format isn't optimized for being sent over the internet or played on different devices.

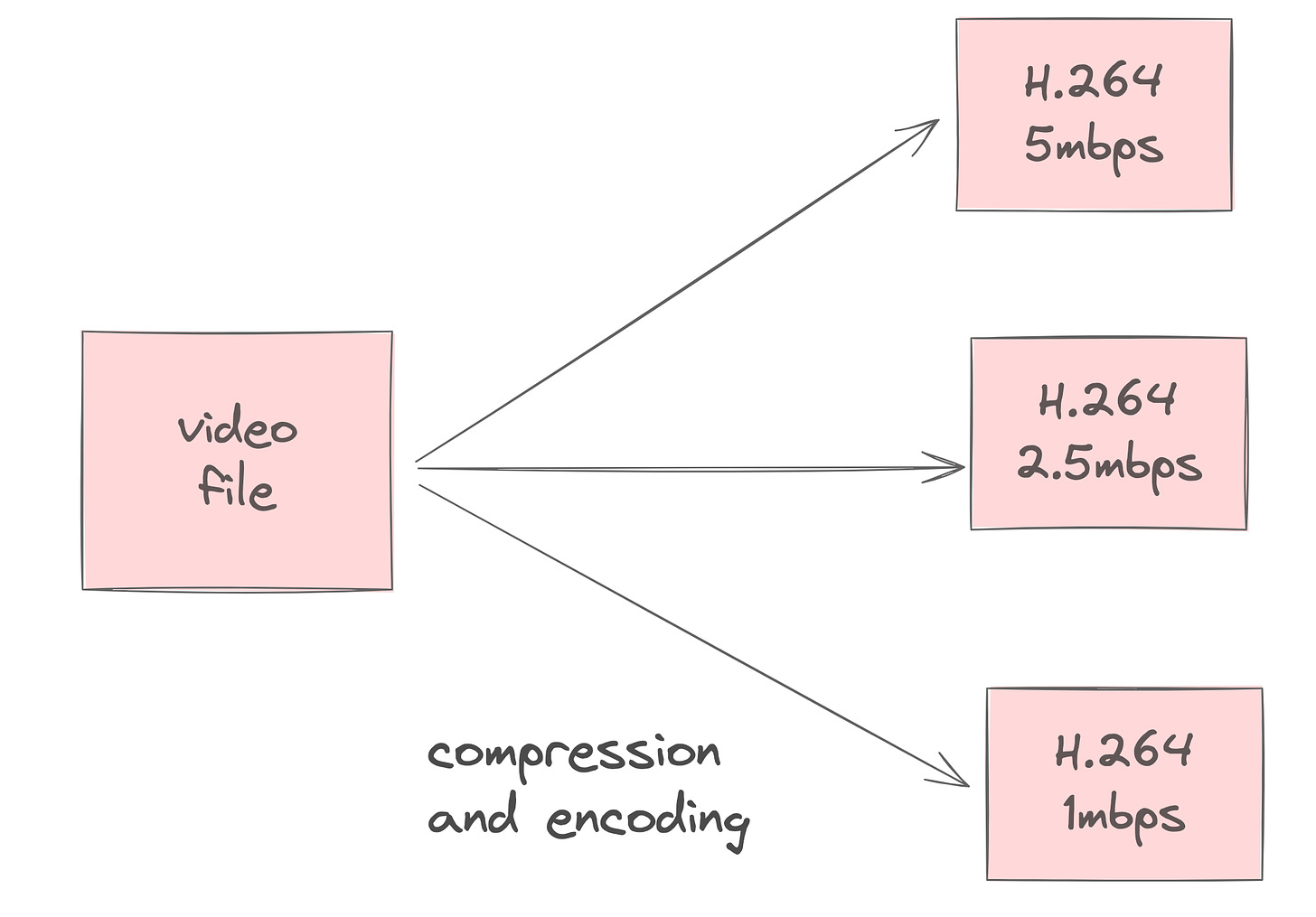

The Role of Codecs: Codecs are the tools used to both compress and encode the video. They determine how the video is shrunk and in what format it is encoded. H.264 is widely used because it strikes a good balance between video quality and file size. H.265 (HEVC), a newer codec, can compress video further at comparable quality but requires more processing power.

At this stage, the original video is encoded into multiple versions, each at a different bitrate and resolution. For example, a high-definition video might be encoded into a high-quality version at 5 Mbps, a medium-quality version at 2.5 Mbps, and a low-quality version at 1 Mbps. Each version targets a different range of network speeds, from fast broadband to slower mobile data.
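The three-version example could be expressed as a small Python script that builds ffmpeg commands, one per version, using the H.264 encoder (libx264). The resolutions, input name, and output file names are assumptions for illustration, and the script only prints the commands. A real live pipeline would read from a live input rather than a file and often produces all versions in a single encoder process.

```python
import shlex
from dataclasses import dataclass

@dataclass(frozen=True)
class Rung:
    name: str
    width: int
    height: int
    bitrate_kbps: int

# Bitrates from the example above; resolutions are assumed for illustration.
LADDER = [
    Rung("high", 1920, 1080, 5000),
    Rung("medium", 1280, 720, 2500),
    Rung("low", 854, 480, 1000),
]

def ffmpeg_args(source: str, rung: Rung) -> list[str]:
    """Build (but do not run) an ffmpeg command that encodes one version with H.264."""
    return [
        "ffmpeg", "-i", source,
        "-c:v", "libx264",                        # H.264 video encoder
        "-b:v", f"{rung.bitrate_kbps}k",          # target video bitrate
        "-vf", f"scale={rung.width}:{rung.height}",  # output resolution
        "-c:a", "aac", "-b:a", "128k",            # audio codec and bitrate
        f"{rung.name}.mp4",
    ]

for rung in LADDER:
    print(shlex.join(ffmpeg_args("input.mp4", rung)))

total = sum(r.bitrate_kbps for r in LADDER)
print(f"Combined video output: {total / 1000} Mbps")  # 8.5 Mbps
```

Producing all three versions means the encoder outputs 8.5 Mbps of video in total, even though each viewer downloads only one version at a time.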

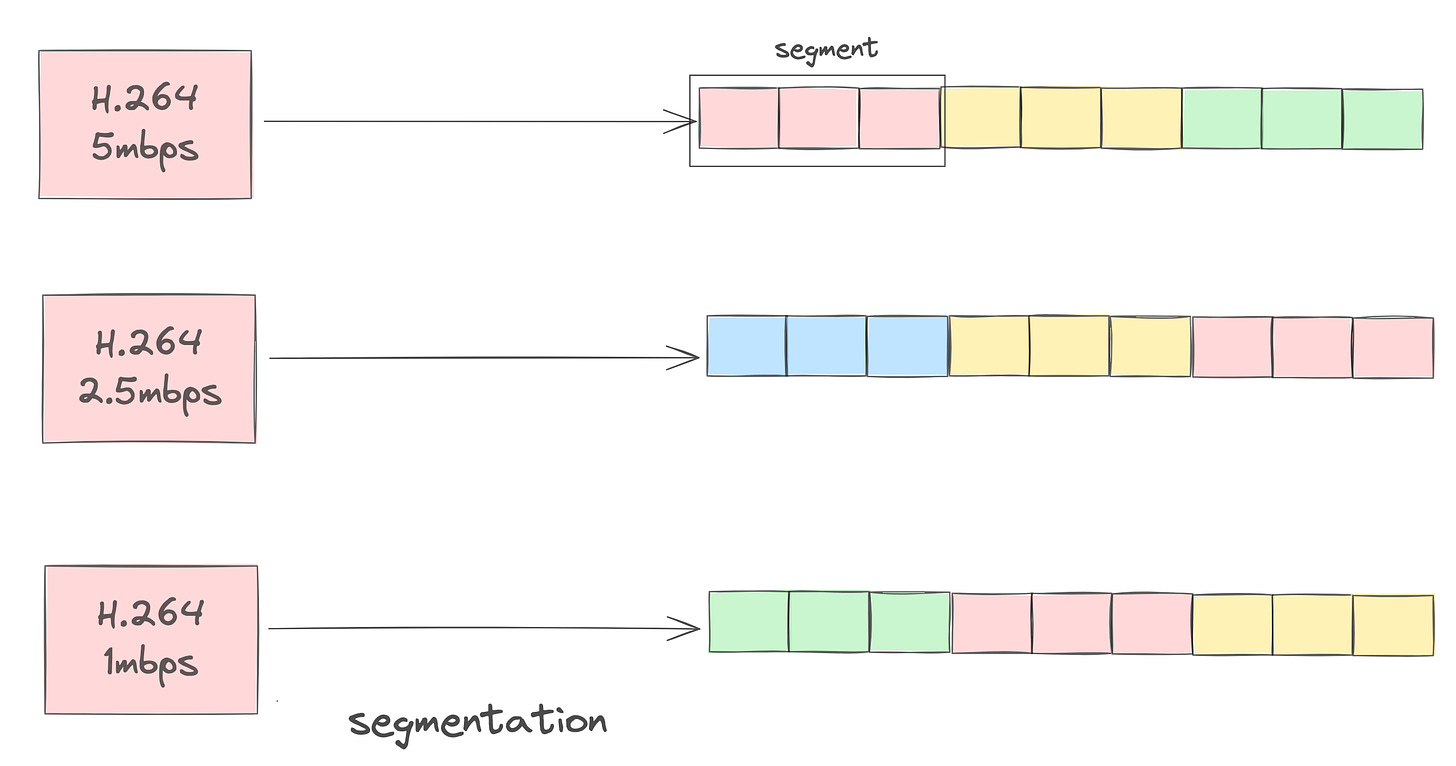

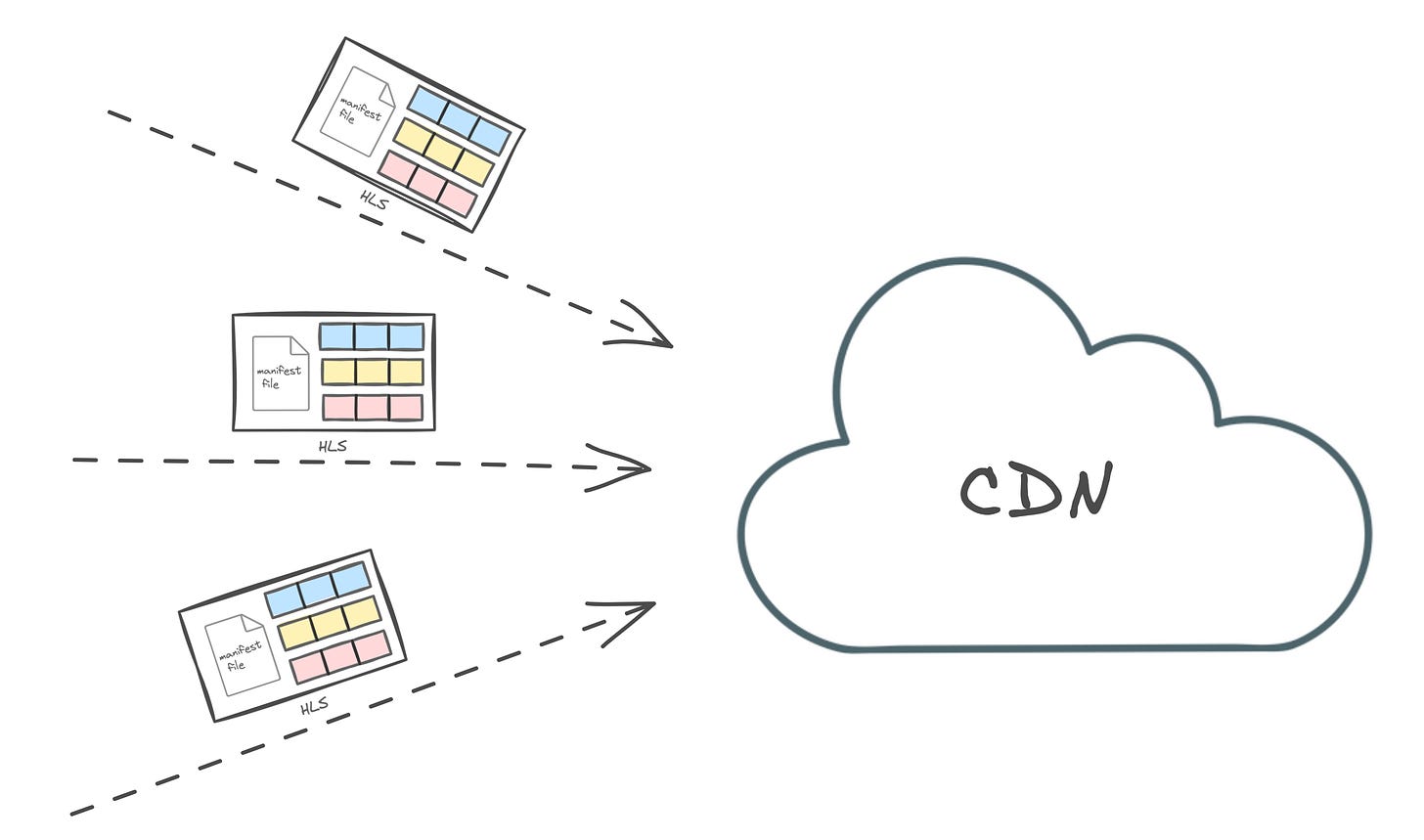

Step 3: Segmentation

Each bitrate version of the video is then segmented into short chunks, typically a few seconds long.

Quick Starts & Smooth Switching: Segmentation breaks the video into short chunks, allowing for rapid start times and smooth transitions between different video qualities, adapting in real time to the viewer's internet speed.

Enables Adaptive Bitrate Streaming: These chunks support Adaptive Bitrate Streaming (ABR), enabling the video player to select the optimal video quality at any moment, ensuring the best viewing experience with minimal buffering.
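Segmentation is easy to sketch in Python. This assumes 6-second segments (the post only says "a few seconds") and a known total duration to keep it short; a live encoder would simply keep emitting segments for as long as the event runs.

```python
import math

def segment_plan(duration_s: float, segment_s: float = 6.0) -> list[tuple[float, float]]:
    """Split a stream into (start_time, length) chunks of at most segment_s seconds."""
    count = math.ceil(duration_s / segment_s)
    return [(i * segment_s, min(segment_s, duration_s - i * segment_s)) for i in range(count)]

def segment_size_bytes(bitrate_kbps: int, seconds: float) -> float:
    """Approximate size of one segment at a constant bitrate."""
    return bitrate_kbps * 1000 * seconds / 8

print(segment_plan(20))              # [(0.0, 6.0), (6.0, 6.0), (12.0, 6.0), (18.0, 2.0)]
print(len(segment_plan(90 * 60)))    # 900
print(segment_size_bytes(5000, 6))   # 3750000.0
```

A 90-minute match cut into 6-second chunks yields 900 segments per version, and each chunk of the 5 Mbps version is about 3.75 MB.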

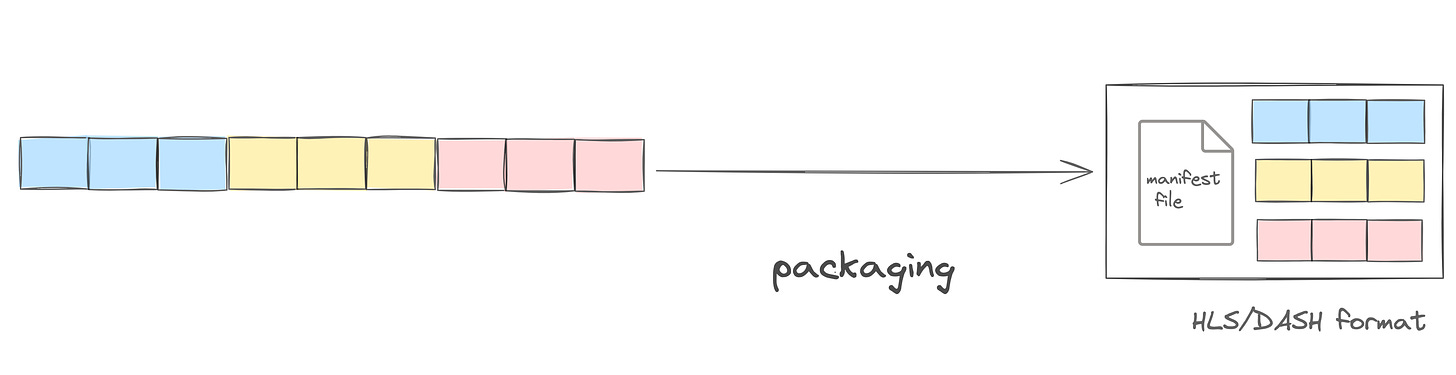

Step 4: Packaging

Wrapping Segments for Compatibility: After segmentation, the video chunks are packaged into streaming formats such as MPEG-DASH, HLS, and Smooth Streaming, so the video can play across a wide range of devices and browsers. Part of this process is creating a manifest file, a crucial component that tells the video player how to assemble and play the stream.

The Role of the Manifest File: Think of the manifest file as the blueprint for the video stream. It lists all the available video qualities, their resolutions and bitrates, and where the segments are located. This file is key to adaptive streaming, enabling the video player to switch between video qualities based on how fast your internet is at any moment and keeping buffering to a minimum.
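For HLS, the manifest that lists the available qualities is a plain-text "master playlist". This Python sketch renders a minimal one; the variant paths and resolutions are hypothetical. For simplicity, BANDWIDTH is set to the video bitrate, whereas the HLS specification defines it as the stream's peak bitrate, so real values are usually somewhat higher. MPEG-DASH uses an XML manifest (the MPD) instead.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Variant:
    uri: str            # hypothetical path to this quality's media playlist
    bandwidth_bps: int  # bits per second
    width: int
    height: int

def master_playlist(variants: list[Variant]) -> str:
    """Render a minimal HLS master playlist with one EXT-X-STREAM-INF entry per variant."""
    lines = ["#EXTM3U"]
    for v in variants:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={v.bandwidth_bps},RESOLUTION={v.width}x{v.height}"
        )
        lines.append(v.uri)  # the URI line follows its EXT-X-STREAM-INF tag
    return "\n".join(lines) + "\n"

VARIANTS = [
    Variant("high/index.m3u8", 5_000_000, 1920, 1080),
    Variant("medium/index.m3u8", 2_500_000, 1280, 720),
    Variant("low/index.m3u8", 1_000_000, 854, 480),
]

print(master_playlist(VARIANTS), end="")
```

Each quality level then has its own media playlist listing its segments; for a live stream, that playlist keeps growing as new segments are produced.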

After preparing the video through compression, encoding, and packaging, the next steps ensure it reaches viewers smoothly and in high quality. Here's how the process continues:

Step 5: Delivery

Traditionally, Content Delivery Networks (CDNs) have been instrumental in caching and delivering static content (like images and videos) to users worldwide, minimizing latency by serving content from locations closest to the user. However, the role of CDNs has significantly evolved to encompass dynamic content—content that changes in real-time based on user interaction, location, or other factors.

- Using a Content Delivery Network (CDN): The video, now ready for streaming, is distributed globally through a CDN. This network of servers reduces delays by caching copies of the video segments close to viewers, giving fast and reliable access wherever they are in the world.
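The caching idea can be sketched in Python: an edge server answers from its local copy when it has one and contacts the origin only on a miss. The class names and segment path are hypothetical, and a real CDN also expires cached entries, which matters for live streams whose playlists change every few seconds.

```python
class Origin:
    """Stands in for the origin server that holds freshly packaged segments."""
    def __init__(self) -> None:
        self.requests = 0

    def fetch(self, path: str) -> bytes:
        self.requests += 1
        return f"<segment data for {path}>".encode()

class EdgeCache:
    """A CDN edge node: serve from the local cache, go to the origin only on a miss."""
    def __init__(self, origin: Origin) -> None:
        self.origin = origin
        self.store: dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> bytes:
        if path in self.store:
            self.hits += 1
        else:
            self.misses += 1
            self.store[path] = self.origin.fetch(path)
        return self.store[path]

origin = Origin()
edge = EdgeCache(origin)
for _ in range(1000):  # 1,000 nearby viewers request the same new segment
    edge.get("high/segment_42.ts")
print(origin.requests, edge.hits, edge.misses)  # 1 999 1
```

A thousand viewers near the same edge cost the origin a single request.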

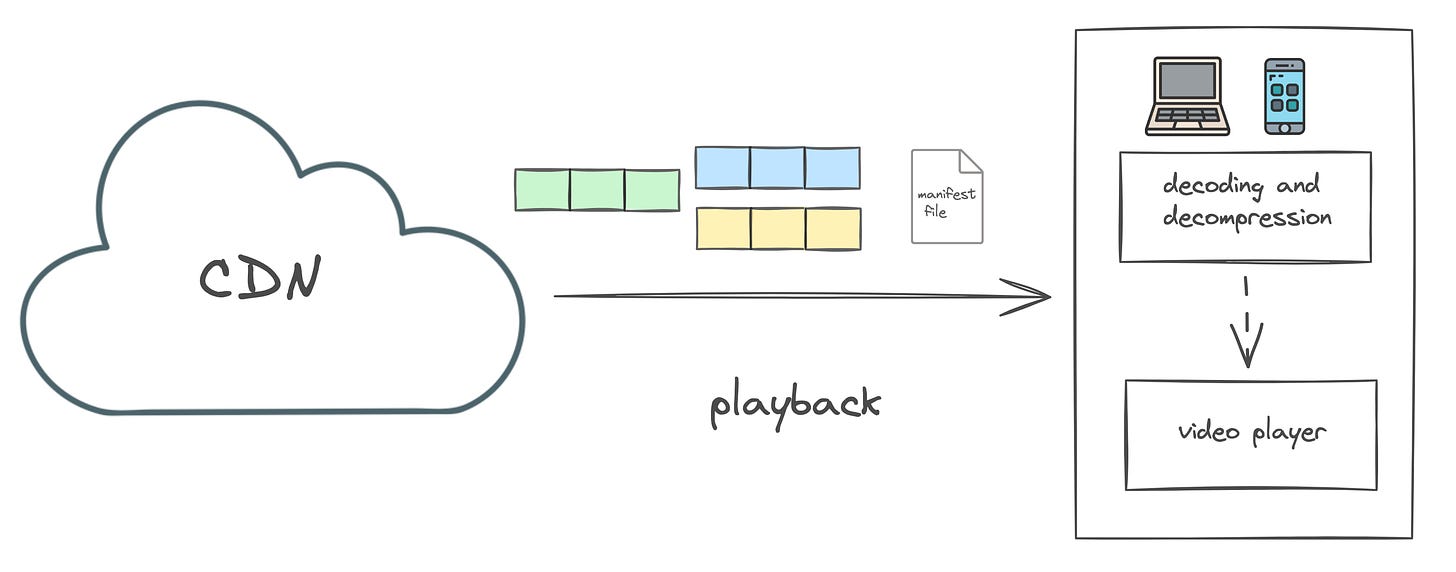

Step 6: Playback, the Final Step in Streaming

During playback, the entire process of preparing, packaging, and delivering the video culminates in the actual viewing experience on the viewer's device. Here's a detailed look at what happens:

Manifest File Retrieval: As soon as the viewer clicks play, the video player on their device contacts the server or CDN to download the manifest file. This file is crucial because it contains all the information the player needs to start the streaming process, including where the video segments are stored, available quality levels, and the sequence for playback.

Decoding and Decompression: With the manifest file guiding the way, the player requests, downloads, and displays the video segments. As each segment arrives, the player decodes and decompresses it, reversing the encoding step to reconstruct video frames for display. Because most video compression is lossy, these frames closely approximate the originals rather than matching them exactly.

Adaptive Streaming in Action: A key feature of modern streaming is the player's ability to adapt video quality in real time to the viewer's current internet connection. Using the information in the manifest file, the player selects the most suitable quality level for current network conditions to keep playback smooth and buffering to a minimum. If the network speed changes, the player adjusts the quality up or down, downloading segments from a different version to match the new speed.
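Putting the playback side together, here's a minimal Python sketch that parses an HLS master playlist and picks a variant as measured throughput changes. The parser is deliberately naive (it assumes no commas inside attribute values, which quoted CODECS lists would violate), and the playlist, throughput samples, and 80% safety margin are assumptions for illustration.

```python
MANIFEST = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
high/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720
medium/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=1000000,RESOLUTION=854x480
low/index.m3u8
"""

def parse_master_playlist(text: str) -> list[tuple[int, str]]:
    """Return (bandwidth_bps, uri) pairs, one per EXT-X-STREAM-INF entry."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    variants = []
    for i, line in enumerate(lines):
        if line.startswith("#EXT-X-STREAM-INF:"):
            attr_text = line.split(":", 1)[1]
            attrs = dict(pair.split("=", 1) for pair in attr_text.split(","))
            # The variant's URI is on the line right after the tag.
            variants.append((int(attrs["BANDWIDTH"]), lines[i + 1]))
    return variants

def pick_variant(variants, throughput_bps: float, safety: float = 0.8):
    """Highest-bandwidth variant within the safety margin, else the lowest one."""
    budget = throughput_bps * safety
    fitting = [v for v in variants if v[0] <= budget]
    return max(fitting) if fitting else min(variants)

variants = parse_master_playlist(MANIFEST)
# Simulated throughput measurements taken after successive segment downloads.
for throughput in (8_000_000, 2_000_000, 4_000_000):
    bandwidth, uri = pick_variant(variants, throughput)
    print(throughput, "->", uri)
# 8000000 -> high/index.m3u8
# 2000000 -> low/index.m3u8
# 4000000 -> medium/index.m3u8
```

After each choice, the player would fetch that variant's media playlist and its next segment, then repeat the decision with a fresh throughput measurement.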

Viewer Interaction: Playback is interactive, allowing viewers to pause, rewind, or change the video quality manually, among other actions. This interactivity enhances the viewer's control over their viewing experience, making it more enjoyable and personalized.

In summary, playback is where the streaming experience comes to life for the viewer. Through the retrieval of the manifest file and the adaptive streaming capabilities of the video player, combined with decoding and decompression processes, viewers are provided with a seamless, high-quality viewing experience that adapts to their individual internet speeds and preferences.

I hope you enjoyed diving into the world of live streaming with me! It's a fascinating blend of tech and creativity that brings content to life, right on your screen.